Ok, what?

Exactly – you’re probably thinking to yourself, just what can he be on about now? I thought the title of this article was a subject around enterprise IT? Well yes, but I do so like a good analogy. I recently managed to pull one off using King Kong and Godzilla, so now I’m going to talk about cars. Bear with me…

I’m a big car fan. Not that I can actually do anything with cars besides drive them, I’m not the sort of car fan who tunes his own engine or anything like that. But I do like a good sports car. As my wife can surely testify, I have wasted lots of money on expensive (mainly German) sports cars.

In the car world, as in many other areas, there are often accepted norms that don’t get challenged. For years, diesel cars were thought of as economic and environmentally friendly, much more so than cars that ran on petrol (or gasoline, depending on which side of the pond you’re sat). And (especially in the UK) we’ve been force-fed a mantra of “speed kills” for a very long time, contributing to a mass criminalization of ordinary motorists to feed huge revenue streams for police forces.

In the IT world, we sometimes come across similar “accepted norms”: ideas that everyone is talking about, to the extent that we almost accept them as gospel. For a while now, I’ve been telling anyone who will listen that FSLogix Office365 Containers are the best way to manage OST files, Skype for Business, OneDrive and other cached files within pooled VDI or RDSH environments, or that FSLogix Profile Containers is an easy, low-maintenance method of controlling your users’ profiles. They’re not the only way, but I definitely think that from a perspective of simplicity, management, cost-effectiveness, performance and user experience, they’re the best around.

However, what we don’t want to do is hype something up to the extent that it becomes an accepted norm without being challenged. “Surely that doesn’t happen in the IT world?” You’d be surprised. Humans are easily influenced in all walks of life, and if you hear something often enough it can generate not only a lot of buzz, but an almost unthinking concurrence. It’s like the perception of diesel cars as environmentally-friendly and the whole “speed kills” movement – they become embedded in the collective consciousness and accepted as hard facts.

IT has sometimes suffered from over-hyping of technologies and solutions that have not really been suitable for particular environments. As an example, let’s look at virtual desktop infrastructure (VDI), which has been hyped to high heaven and pushed down our throats at every single opportunity over the last ten years. How many of us have seen VDI proposed – or even deployed – as a solution to problems that it really wouldn’t help with? I’ve personally seen enterprises deploy VDI where they have already had computing power situated on every desk, or where their applications are very much unsuited to the virtualized delivery model. And worse, not only does it not address the problems at hand, it makes them worse by introducing great layers of complexity to the managed environment that previously didn’t exist. Now this isn’t an anti-VDI tirade – I have seen it perform excellently in many businesses and bring great value to users – but the point I am trying to make is no-one should adopt a technology simply because all and sundry are talking it up as the magic bullet that will solve all of your problems and take you to the next step of desktop evolution. Cloud is another prime example – it can do great things, but it’s very important to choose horses for courses and not get caught up in throwing money at a new paradigm just because everyone you talk to appears to be embracing it as the technological equivalent of paradise itself.

Let’s jump back to cars for a second. How did we come to the perception that diesel-powered engines were so much cleaner and environmentally-friendly than petrol-driven ones? Even though the evidence at hand seemed to contradict this perception – I’ve always referred to diesel cars as “smokers” due to the occasional cloud of fumes they will spit out – the general consensus, for a long time, always seemed to be that diesel was better.

In the case of diesel, it was a poor understanding of emissions and their effects. Legislation was focused very much on CO2 as the environmental threat, and petrol engines emit more CO2 than diesel. Diesel also involved less refining effort, and therefore there was less environmental pressure associated with its actual production.

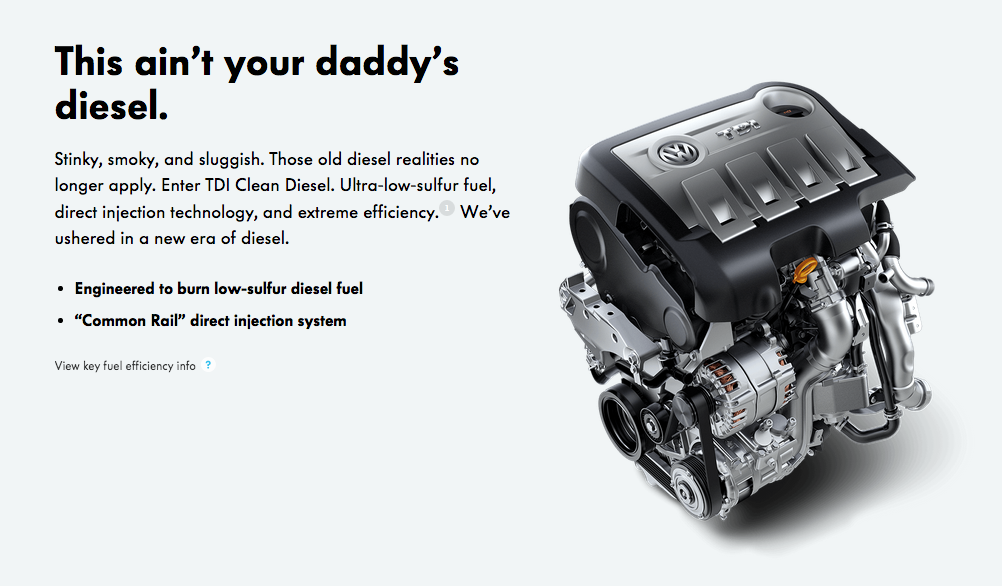

However, over the years, we’ve become aware that diesel engines produce more nitrogen oxides and sulphur dioxides, which are associated heavily with poor urban air quality. And then there was the whole “diesel-gate” scandal, as Volkswagen were caught out putting “defeat devices” into their cars to make them reduce their emissions to legal levels only when in a testing situation, and pumping out up to forty times the legal amount when in normal driving mode. An interesting aside from this, from a technical perspective, is that the “defeat device” would use a situation when all non-driving wheels were moving but there was no pressure on the steering wheel as an indication that it was under test, and engage the “reduced emissions” mode based on this set of parameters.

So the reason for diesels becoming known as environmentally-friendly was down to, in the main, a poor understanding of the actual effects of emissions on the environment. Naturally, your average man in the street would not have an intimate knowledge of the science around air pollution, and it was only when experts in the field brought their knowledge up-to-date (and when VW’s charade was exposed), that the perception of diesel as a cleaner fuel began to unravel. See below for an example of a now-irony-laced advert for their diesel engines…

You can equate this with IT. How many IT departments have an expert-level understanding of the internals of the devices, operating systems and software under their management? In a lot of cases, IT departments are responsible for operational administration, and a great deal of their understanding of how their systems work on a deeper level is obtained by reading blogs and articles by vendors or community industry experts. And the consensus among vendors and the community can change over time, just like it did with the understanding of the impact of diesel fuel on the environment. Let’s take Folder Redirection as an example here – there are experts within the IT community who will happily argue the case either for or against Folder Redirection as a solution, with very compelling standpoints from both sides. What IT departments need is to be given the right information, relevant to their environment, which allows them to understand their systems better – and receive this information in a clear, concise manner.

And let’s go back to cars again to discuss the second analogy I raised – speed. Somewhere in the 90s, there was a conscious decision made (at least in the UK) to come up with the mantra “speed kills”. Unlike the diesel engines, there was no science behind this that could be erroneously interpreted. Speed is speed – you put your foot down, you go faster, a concept that just about anyone can easily grasp.

Now anyone with half a brain can concede that driving a car at 90mph in a 20mph speed zone on a housing estate in the evening with kids and pets around is pretty goddamned stupid. It’s out of examples like this that the – initially noble – idea of “speed kills” was born. But it slowly grew into something far beyond this, an arms race against the motorists themselves, entwined deeply into the media machine. Turn on any British TV channel and you can see shows like “Police Interceptors” or “Police Camera Action!”, complete with traffic officers standing next to the carriageway looking at a pile of crumpled metal, and declaring “this accident was caused by speeding, pure and simple”.

Initially some people (myself probably included) railed against this and actually asked the government of the day to provide statistics to back this up. The problem was, this was a huge job. Collating data from individual police forces and stitching it together involved a lot of time and effort, and there was no central agency that could provide the resource to do it.

Eventually though, the government commissioned an organization called the Transport Research Laboratory, based in Crowthorne. This was to be their official vehicle research centre, where they collate data on traffic accidents as well as slinging all manner of cars around high speed test tracks, crash rooms, drag strips and banked curves in the midst of the lush forests surrounding sunny Bracknell. And it was here that they decided to do some huge studies on crash analysis and speed, taking into account some enormous sample sets.

Now, excessive speed makes any accident more violent, but does it specifically cause the accident in the first place? Take a guess at what percentage of accidents the TRL actually found were caused directly by speeding. 40%? 30%? (It’s interesting how high people’s initial guesses will go, indicating a high level of penetration for the government’s “speed kills” message.) In actual fact, the rate was around 4%. If you then set aside “loss of control” accidents (black ice, wheels coming off, etc.), the percentage of accidents where speed was a contributory factor was under 2%. The government’s own research proved, quite tellingly, that the highest cause of accidents on the road is quite simply what police forces lump under the catch-all definition of “driving without due care and attention” – failure to judge path or speed of other road users, inattention to approaching vehicles, failing to spot a hazard, etc.

Does the 1.9% justify the increased usage of speed cameras? Probably not, but police forces are still expanding their camera presence, and it’s mainly because of revenue, not safety. Speed cameras can generate thousands of pounds an hour when active, and several individual cameras in Britain posted earnings of well over the £1 million mark last year! But the TRL also did a study on speed cameras, and one of the things it looked at, amongst many others, was the presence of speed cameras and their effect on accidents. And what did the statistics come up with? Shockingly, precisely the opposite of what the anti-speed campaigners were hoping for – they actually found that speed cameras made accidents more, not less, likely to occur. At road works, the accident rate increased by a mammoth 55% with the presence of speed cameras. On open motorway, the cameras increased it by a not-inconsiderable 32%. This study was done over a period of eighteen months and covered 4.1 million vehicle kilometres – a pretty large and comprehensive sample set.

Before I go off on a real rant, let’s get back to the point I was trying to make. IT is the same, in that much of the time, it is very difficult to collect, collate and compare operational metrics, for reasons of time, skills and resource. The British public were lucky that an organization like the TRL was commissioned to pull together the statistics for road safety (even if its findings were unceremoniously ditched for coming up with the “wrong” conclusions!) The IT world isn’t as politically motivated, but what it does have in common is that we’re often left to our own devices when it comes to gathering the numbers required for monitoring performance, testing systems and building business cases. If you get inaccurate data or metrics, then you’re going to be in trouble.

So what we need is good, relevant, concise data about our environments, and we need it delivered without a huge overhead in terms of skills and management that disrupts our daily workloads. And if we can get data delivered of this quality in this way, we can then make proper informed decisions about the latest buzz we’re hearing in the IT community. Such as people like me telling everyone that FSLogix is the solution to performance issues on Office365 and dealing with the whole Windows profile in general 🙂

Is there a way we can get data of this quality, focused to our specific environmental requirements, without the huge effort?

Well, there are a plethora of monitoring solutions in the world. But the problem I generally find with them is that they all involve a significant investment in setup, learning and ongoing tuning. It’s almost like asking the general public to do the TRL’s research themselves – it can be a huge task. And if you don’t have a deep understanding of the internals of modern computer systems, then you’re going to be in the position of possibly looking in the wrong places for your data anyway, much like when we concentrated on CO2 as the harmful exhaust emission gas without taking into account all of the other poisons being spewed out.

This is where – yes, I’ve got to my point eventually – a company called Insentra comes in, with an offering called Predictive Insights and Analytics (PIA for short). I’m so glad they didn’t call it Predictive Insights, Trends and Analytics 🙂

The importance of metrics

Many – I’d say up to 85% – of the projects I’ve worked on recently have little or no metrics from right across the environment. The metrics that are being monitored (if any) are infrastructure-focused – networks, storage, databases. But in today’s world it’s the holistic view, covering all the components that contribute to end-user experience, that needs to be monitored. Very few places monitor the entire estate with the same level of detail reserved for back-end infrastructure components.

And even though there are great technologies out there that can provide this level of monitoring across the entire estate, there are problems. Technology like Lakeside SysTrack offers unparalleled visibility into your environment, but it needs to be purchased, implemented, supported, and continually tuned. This is a not-insignificant investment of time and resource – and as such there needs to be a compelling business case that can justify the investment beyond the scope of the current project. This is often the stage where interest fades – companies love the technology, they see the need, but they struggle to build the business case that will turn interest into investment.

Insentra’s PIA offering ticks a number of boxes that will make it so much easier. Firstly, they build the dashboards for you, using their own tech on top of a base Lakeside SysTrack core, and can tailor this to as many – or as few – metrics as you require. Secondly, it can all be cloud-based (although it doesn’t have to be), reducing the overhead of deploying the solution either as a PoC or in production. Thirdly, you can consume the offering for a specific period of time, so if you just need to invest in a monitoring solution for the specific duration of a project, it doesn’t have to be something you’re signing up to pay for on a continual basis. But the most important point is that monitoring doesn’t become an ongoing headache for the IT department – it just works. And that frees your staff up to innovate, to find new ways for IT to make the business more profitable and productive – not leave them fighting fires all of the time.

Combining features of FSLogix and Insentra gives you a lot of freedom to concentrate on developing IT services and enabling your users. Management complexity of applications and profiles is stripped away, performance problems with the Office365 suite are removed, and you get proactive alerts on the health of your environment and continual justification of whether your infrastructure is performing as expected. You don’t need to build a business case – the service itself can build it for you 🙂

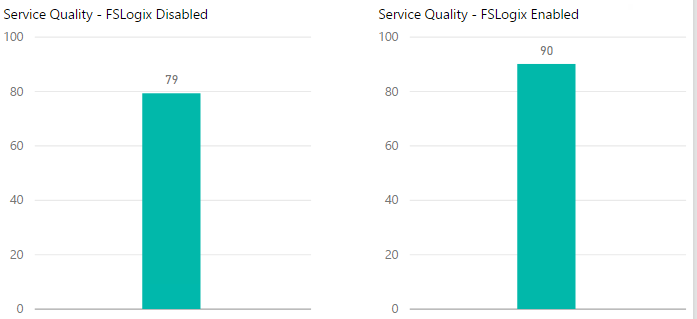

We can easily spit out this “auto-justification” by pointing the PIA engine at FSLogix itself to find out exactly how much of a business benefit you’re getting. PIA also calculates a cumulative metric called “service quality”, which is an attempt to quantify something that is so important to every enterprise out there: the sometimes-intangible quality known as “user experience”.

Insentra did what they do, and collated custom dashboards that run within their managed service to provide information on KPIs for systems both with and without the FSLogix solutions installed. There was no need for any learning of the product on our part – we simply told Insentra what we wanted, and they came back with the dashboards for us to plug our test systems into. As easy as that – one agent installation, and we are good to go, and now we can define the KPIs we want to see on our dashboards.

With regard to understanding Outlook performance both with and without FSLogix in use, we plumped for three fairly straightforward indicators.

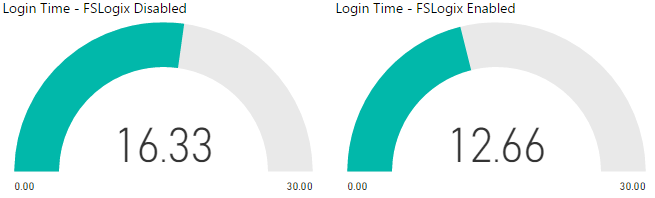

- Logon times – this is usually the #1 KPI of your average user, so a very pertinent metric to measure

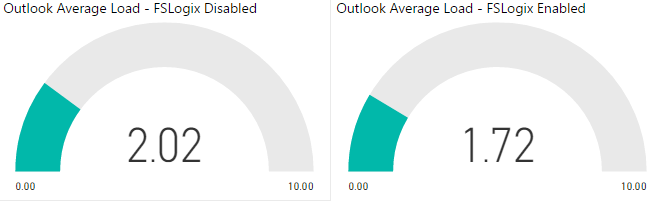

- Outlook launch times – especially in non-persistent environments, ensuring solid launch times of key applications is also vital, and email is one of the most commonly-used ones out there

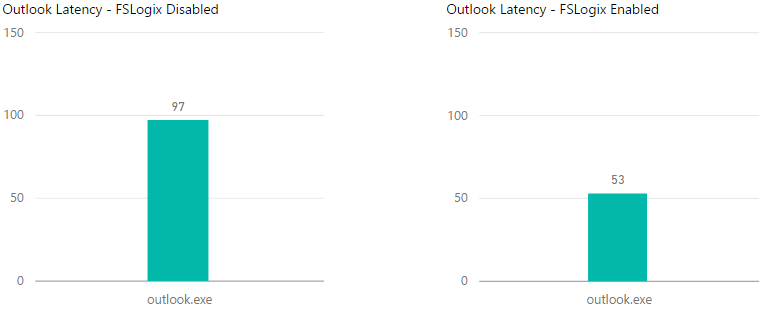

- Outlook latency – we need to measure the performance of our key applications in-session, so this KPI is appropriate because it measures the latency between the Outlook client and any other system outside of the session. Because FSLogix maps a VHD, this should be seen as “local” to the session and show lower latency, which would translate into better application performance
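All three indicators are lower-is-better measurements, so one plausible way to express a before-and-after comparison is as a percentage reduction against the baseline. The sketch below shows that arithmetic only; how PIA itself derives its figures is not described here, and the function name and sample values are hypothetical.

```python
def percent_improvement(baseline: float, improved: float) -> float:
    """Percentage reduction of a lower-is-better KPI relative to its baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - improved) / baseline * 100.0


# Hypothetical sample values, NOT measurements from the labs in this article:
# a KPI that drops from 40 units without FSLogix to 30 units with it.
example = percent_improvement(baseline=40.0, improved=30.0)
print(f"{example:.1f}% improvement")
```

A negative result would indicate that the KPI got worse rather than better, which is worth surfacing rather than hiding when building a business case.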

The stats

Here are some of the insights that PIA provided for us with regard to FSLogix, and this is an example of just how Insentra and FSLogix together could also work for your enterprise.

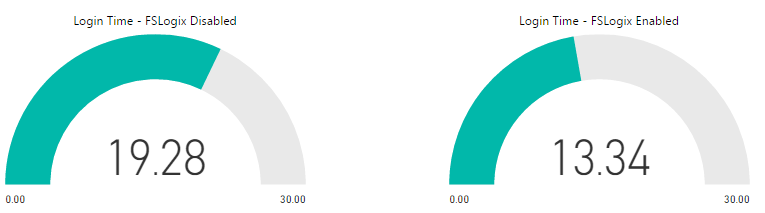

Lab 1 – XenApp 7.6 published desktops on Server 2012 R2, 200 users over a 5-day monitoring period

Logon times

We can see a full 31% improvement in the logon time KPI.

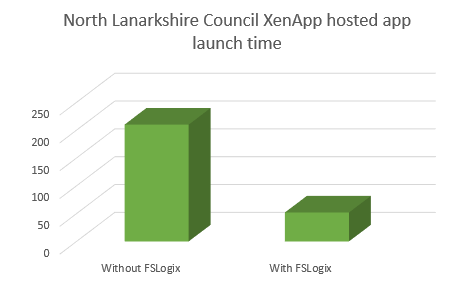

Outlook launch times

There is a 51% improvement in Outlook launch time KPI.

Outlook latency

There is a 45% improvement in the Outlook latency KPI.

Service quality / user experience

There was an overall improvement of 14% in total service quality, and within this increase, we observed most of the improvements around disk and latency.

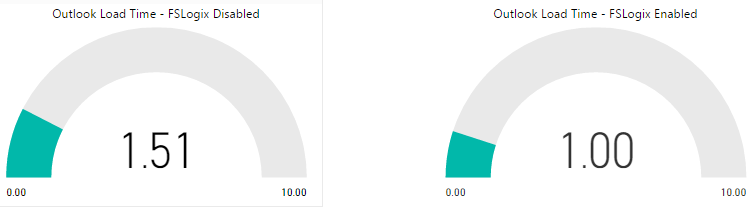

Lab 2 – Microsoft RDSH published desktops on Server 2012 R2, 450 users over a 5-day monitoring period

Logon times

There was a 23% improvement in the logon time KPI.

Outlook launch times

The Outlook launch time KPI improved by 23%.

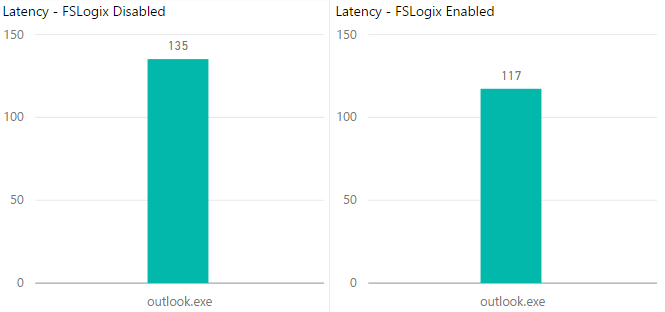

Outlook latency

Latency KPI showed an improvement of 13%.

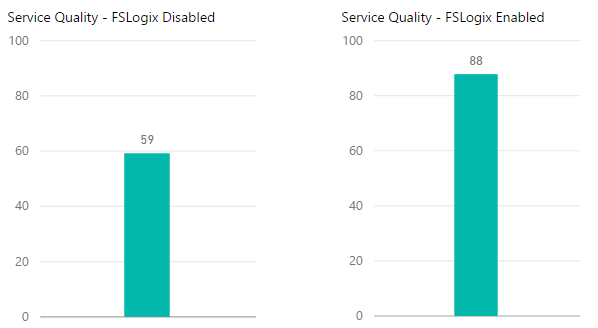

Service quality / user experience

The overall service quality / user experience metric improved by 49%.

So now we’ve got all the stats, it’s up to us how we interpret them, but what we can see is that with the FSLogix solution enabled we have improvements in all of our key performance indicators. Given that lab environments with test users are often quite simple, it’s important that we see improvement here, because the benefit can only increase when the solution is scaled to large numbers of “real” users. Interestingly, the improvement in service quality on RDSH (49%) was way higher than that seen on XenApp (14%). This is probably because XenApp itself makes some improvements around the handling of system resources and the user experience, making for a much less marked increase when FSLogix was applied to a XenApp endpoint. But XenApp or not, we can see that the performance is better in every area, which is an important first step in building out our business case.
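To make the comparison between the two labs easier to eyeball, here is a small sketch that tabulates the percentage improvements reported in the Lab 1 and Lab 2 sections above and prints the gap between platforms. The dictionary layout and the `platform_gap` helper are my own illustration, not part of PIA; the XenApp service quality value uses the 14% quoted in the Lab 1 results.

```python
# Percentage improvements as reported in the Lab 1 (XenApp) and Lab 2 (RDSH) sections.
reported = {
    "Logon time": {"XenApp": 31, "RDSH": 23},
    "Outlook launch time": {"XenApp": 51, "RDSH": 23},
    "Outlook latency": {"XenApp": 45, "RDSH": 13},
    "Service quality": {"XenApp": 14, "RDSH": 49},  # 14% as quoted under Lab 1
}


def platform_gap(kpi: str) -> int:
    """RDSH improvement minus XenApp improvement, in percentage points."""
    return reported[kpi]["RDSH"] - reported[kpi]["XenApp"]


for kpi, row in reported.items():
    print(
        f"{kpi:<20} XenApp {row['XenApp']:>3}%  "
        f"RDSH {row['RDSH']:>3}%  gap {platform_gap(kpi):+d} pts"
    )
```

Laid out this way, the individual KPIs improved more on XenApp in these labs, while the composite service quality figure improved more on RDSH, which is exactly the sort of detail worth questioning before it goes into a business case.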

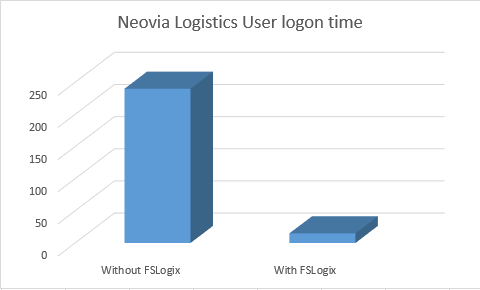

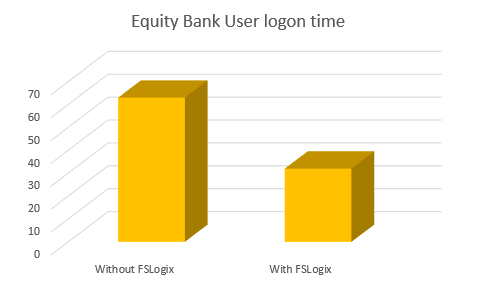

The real world and “user entropy”

Once you move away from test environments – which are by their nature very clean and uniform – into the “real” world, you start to see more noticeable improvements. Some of the FSLogix guys refer to this phenomenon as “Windows rot”, the unpredictable nature of endpoints once they start layering swathes of applications and processes on top of the underlying system. I prefer the term “user entropy” – the decline into disorder you get as users are let loose on the provisioned environment. Whichever term you prefer, it definitely is something you will become only too familiar with. Take a look at the before-and-after statistics we’ve collected in this way with a real-world production deployment of the FSLogix solution…

…and you can see what we are talking about. We can observe percentage improvements of between 50% and a mammoth 95% in the customer-designated KPIs. That is the sort of incredible improvement that makes a huge difference not just in productivity but also user faith in the enterprise environment, and more than justifies the effort put into the solution which has made this possible.

Summary

In much the same way as the challenges from members of the public to justify the “war on speed” made the government commission big surveys from the TRL, IT departments need to be challenging software vendors to show exactly how their solutions make the improvements that they’re claiming as benefits.

But we want to throw that challenge back at the customer a little bit as well. We are going to challenge you to put FSLogix’s software and Insentra’s PIA service together in your environment, on a small scale or large, and have a look at the benefits that you get. If you don’t see those improvements, then lift the solutions back out. It’s as simple as that.

What you will get is:-

- Simplified management of applications and profiles

- Improvement of in-session performance and user experience

- A solution to the common problems of Office365 deployments (such as poor performance of Outlook, Skype for Business and OneDrive)

- Monitoring of specific KPIs customized to your enterprise’s needs

- A cloud-based or on-premises monitoring solution that requires no infrastructure, training or specialist skills

- Proactive alerting of issues within your environment

- The potential to back up business cases with real-time data and analytics

- More time for IT teams to spend on innovating and enabling the business

If you don’t see these improvements, then you simply don’t continue to consume the services or use the software. Straightforward, simple, no strings attached. And that’s the way modern IT environments should be.

So to draw the last drop of blood out of my motoring analogy, you want your IT environment to go from being something like this…

…to something like this…(although, it should be stressed, not with a comparable price tag!)

In my humble opinion, adopting technologies like those of FSLogix alongside services like Insentra’s PIA is a large step forward to achieving this level of enterprise nirvana. It’s a win-win – what’s stopping you getting involved?

You can find out more about Predictive Insights and Analytics at http://www.insentra.com.au/predictive-insights-and-analytics/